GPT-4o - Multimodal AI Model for Enhanced Reasoning

Product Information

Key Features of GPT-4o - Multimodal AI Model for Enhanced Reasoning

A multimodal AI model from OpenAI that reasons across audio, vision, and text in real time, with faster responses and lower API costs than GPT-4 Turbo.

Multimodal Reasoning

Reason across audio, images, and text in real time within a single model, instead of chaining separate models for transcription, vision, and text generation.
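As a rough illustration of mixed-modality input, the sketch below builds one user message that pairs a text question with an image, in the content-part format that OpenAI's Chat Completions API accepts. The helper name `build_image_question` is hypothetical, the image is embedded as a base64 data URL, and audio input is not covered here.

```python
import base64
import json


def build_image_question(question: str, image_bytes: bytes, mime_type: str = "image/png") -> dict:
    """Return a Chat Completions user message pairing a text question with an image.

    The image is embedded inline as a base64 data URL inside an "image_url"
    content part; the text goes in a separate "text" content part.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {
                "type": "image_url",
                "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for a real image read with open(path, "rb").read().
    message = build_image_question("What is shown in this image?", b"fake-image-bytes")
    print(json.dumps(message, indent=2))
```

Assuming the official `openai` Python package (v1 or later) and an `OPENAI_API_KEY` environment variable, the message could then be sent with `OpenAI().chat.completions.create(model="gpt-4o", messages=[message])`.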

Enhanced Speed

Responds faster than earlier GPT-4 models, which makes interactive, latency-sensitive applications practical and improves the user experience.

Cost-Effective

Lower per-token API pricing than GPT-4 Turbo makes GPT-4o affordable for a wider range of applications.

Real-Time Processing

Process and analyze data in real time, enabling applications that need immediate insights and decisions.

Cross-Modal Learning

Trained end-to-end across audio, vision, and text, so a single network processes all modalities and does not lose information, such as tone of voice, by passing data between separate models.

Use Cases of GPT-4o - Multimodal AI Model for Enhanced Reasoning

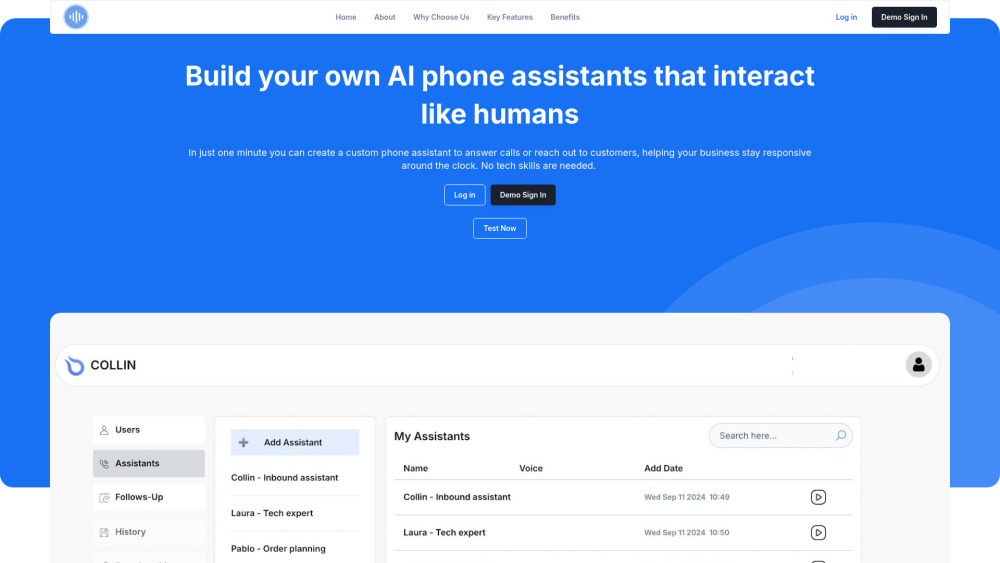

- Develop multimodal chatbots that understand audio, image, and text inputs.

- Create real-time analytics tools for audio, image, and text data.

- Build AI-powered applications that require reasoning across multiple modalities.

Pros and Cons of GPT-4o - Multimodal AI Model for Enhanced Reasoning

Pros

- Enables real-time reasoning across multiple modalities.

- Faster and cheaper to use than GPT-4 Turbo.

Cons

- Usage is billed per token, so costs can become significant for high-volume or image- and audio-heavy workloads.

- Access depends on OpenAI's rollout, and some capabilities may not be available to every account or API tier.

How to Use GPT-4o - Multimodal AI Model for Enhanced Reasoning

1. Access GPT-4o through OpenAI's API (model name `gpt-4o`), for example with one of OpenAI's official SDKs.

2. Integrate the model into your application using OpenAI's documentation and examples.

3. Optionally, fine-tune the model for your specific use case with OpenAI's fine-tuning tools.
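For the fine-tuning step, OpenAI's chat fine-tuning expects training data as JSONL, with one `{"messages": [...]}` example per line. The helper below is a hypothetical sketch that turns question and answer pairs into that format; the system prompt and example data are placeholders, not recommended content.

```python
import json
from typing import Iterable, Tuple


def to_finetune_jsonl(examples: Iterable[Tuple[str, str]], system_prompt: str) -> str:
    """Serialize (user, assistant) pairs into chat-format JSONL, one example per line."""
    lines = []
    for user_text, assistant_text in examples:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": user_text},
                {"role": "assistant", "content": assistant_text},
            ]
        }
        # ensure_ascii=False keeps non-English training text readable in the file.
        lines.append(json.dumps(record, ensure_ascii=False))
    # Every record ends with a newline; an empty input yields an empty string.
    return "".join(line + "\n" for line in lines)


if __name__ == "__main__":
    data = [("Where is my order?", "You can track it under Account > Orders.")]
    print(to_finetune_jsonl(data, "You are a support agent for a hypothetical store."), end="")
```

After saving the output as `train.jsonl`, it could be uploaded with `uploaded = client.files.create(file=open("train.jsonl", "rb"), purpose="fine-tune")` and a job started with `client.fine_tuning.jobs.create(training_file=uploaded.id, model=...)`, choosing a GPT-4o snapshot that OpenAI's documentation lists as fine-tunable.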